tl;dr

A Nutanix cluster can persist a replicated write across two nodes in around 250 uSec which is critical for single-threaded DB write workloads. The performance compares very well with hosted cloud database instances using the same class of processor (db.r5.4xlarge in the figure below). The metrics below are for SQL insert transactions not the underlying IO.

The problem with single threaded DB applications

There is a lot of badly written SQL in the world which issue a commit on every single insert into a database. Often the code is embedded in off-the-shelf applications which means that infrastructure teams cannot modify it easily or legally. These sorts of apps can be difficult to move to modern cloud infrastructure.

Such code puts a strain on the storage layer because it forces a synchronous IO for every insert. And since there is no concurrency (typically a single IO stream) the entire DB can only go as fast as the storage can persist a single write.

Traditional HA-Pair storage works great for such a scenario because on-prem storage typically use some sort of NVRAM and a dedicated interconnect between the controller heads.

With Nutanix HCI, things are a bit different because it’s a scalable cluster. However, there are a lof of simularities. Rather than NVRAM we can write to NVME or SSD storage, which can be done in less than 100 uSec. By using RDMA and RoCE interconnects – we can create low-latency interconnects too.

It turns out that even using older hardware (Skylake class processors) the Nutanix environment can achieve an insert latency of < 0.5ms with RF2 (persisting to NVMe or SSD across two nodes). This compares well to hosted SQLserver performance on AWS.

We are able to do this because the bulk of the replication happens in parallel. Once the hypervisor sends an IO to the Nutanix CVM, the data is written to the local media, and sent across the wire to a remote CVM in parallel. The write is only ever acknlowedged by the CVM when two (or more) copies are resident on persistent media (NVME Flash or SSD)

Experiment

The above experiment was performed using some SQL code I found on the internet. To keep it simple I insert a string and a random part to try and avoid creating overly compressable data. I based it on this original code from Stackoverflow.

use inserttestdb;

set nocount on;

declare @start datetime = getutcdate();

declare @i int = 0;

while @i < 100000

begin

INSERT INTO table1 (

col1,col2)

VALUES('logmydata',(SELECT RAND()*(10-5)+5));

set @i = @i+1;

end

select datediff(ms, @start, getutcdate());

All this code does is to insert the string ‘logmydata’ and a random number into a table, but because the SQL is wrapped in ‘begin‘ and ‘end‘ statements – each insert is a single transaction which must be commited before the next insert can start. The code loops around 100,000 times and gives a time for the time taken for the entire loop to execute.

Result

The result (total time taken to insert 100,000 rows) is 38350msec (38 seconds). So each insert took 38350/100000=0.3835 msec or 383.5uSec. Which in turn gives a rate of 2,597.4 inserts per second.

Where is the latency?

Intuitively we expect the bulk of the latency to be in the storage layer. There is very little SQL code being executed and we expect a storage write for every single insert (since every insert is also a complete transaction which must be commited to the database log file on persistent storage).

Storage workload profile

Since Nutanix is providing the storage layer I can observe the IO profile from the storage side. I have a separate disk for my database log file so I can be sure that what I am looking at is just the transaction log IO.

The metrics above tell us the the storage is seeing

- 2,500 IOPS per second – which is very close to the Insert rate of 2,597/s

- The randomness is 0% – whis is expected because DB transaction logs are written to sequentially

- There are no reads, only writes – this is expected because transaction logs are usually only read during recovery

Now what about the IO size. We know that the DB will commit every transaction to disk – and each insert is only a few bytes, so we expect the IO size to be very small.

Almost all the IO’s are 4KB in size, which is small but certainly not the handful of bytes that we inserted into the database. It turns out that 4KB is the page size used by Intel x86. We are writing through a filesystem and this is typically done as an entire page. With that in mind 4KB makes sense as the IO size.

Finally we want to know the IO latency that the CVM is responsible for. There are a lot of layers involved (SQL->Windows OS->NTFS->Virtual Disk->Hypervisor) before the CVM even sees the IO.

Now, this is where things get interesting. As far as the CVM is concerned – it is returning the write (replicated across two CVMs, down to NVMe) in around 160 microseconds 0.160 milliseconds. But our database inserts take 385 uSec – more than twice that number. Some investigation is needed.

Latency breakdown investigation

Rather than trying to trace a single IO through the entire system – we can make some approximations with a few experiments.

We can use some storage IO testing tools to abstract the overhead of SQL and see what sort of latency we get from Windows

We know from the IO profile above that the log disk sees 4KB IO’s sequential with a single outstanding IO. We can tell it’s a single outstanding IO using littles law which tells us if the latency is 385 uSec and the throughput is 2,597 IOPS, there is only one outstanding IO. Using fio and diskspd we observe the following respnse times for the 4KB write.

| IO Generator | 4KB latency |

| Windows fio | 280 uSec |

| Windows diskspd | 258 uSec |

So far it looks like going from a Windows Guest, through the filesystem, virtual device driver and hypervisor CVM connection takes around 100 uSec because diskspd is seeing ~260 uSec at the guest level and the CVM is delivering the IO back to the hypervisor in 160 uSec. This feels like a large gap, but it’s what the data tells us.

But there is still a large gap between 385 uSec seen by SQL server and ~260 that it takes to get a 4KB write done.

We would suspect that although the insert time is probably dominated by the IO latency there is probably a bit more happening than just disk IO

The above was captured when the SQL insert benchmark was running. In it we see that for the Physical disk containing the log – the disk was busy about 78% of the time. This means that 22% of the time was doing something else. We can then estimate that the IO latency for the inserts is 78% of the total time – this host is doing nothing apart from running the benchmark.

78% of 385 uSec is 300 uSec, 22% of 385 uSec is 84.7 uSec

So the breakdown looks like this

| Layer | Latency Contribution |

| Non IO (SQL+OS code execution) | 84.7uSec. (22% of 385 uSec) |

| IO Latency between SQL and Windows | 42.3 uSec. (78% of 385 uSec – 258 uSec [diskspd 4K]) |

| IO Latency of a 4KB write from Windows to CVM | 94 uSec (258 uSec -164 uSec from UVM) |

| Latency of CVM replicated write | 164 uSec |

| Total | 385uSec. (84.7+42.3+94+164) |

This is a very rough estimate, but I do know from previous experiments that a 4KB write replicated across two CVMs and returened to the guest VM is in the region of 200 uSec or so when using Linux and a raw disk – so ~258 from Windows through a filesystem seems reasonable. The latency of the SQL pieces are a bit of a guess, but look reasonable.

Summary

So, what have we learned?

Despite not having NVRAM, dedicated storage hardware and interconects a Windows guest running on Nutanix hardware (older Skylake HW at that) can persist a 4KB write across 2 CVMs down to flash media in around 260 uSec.

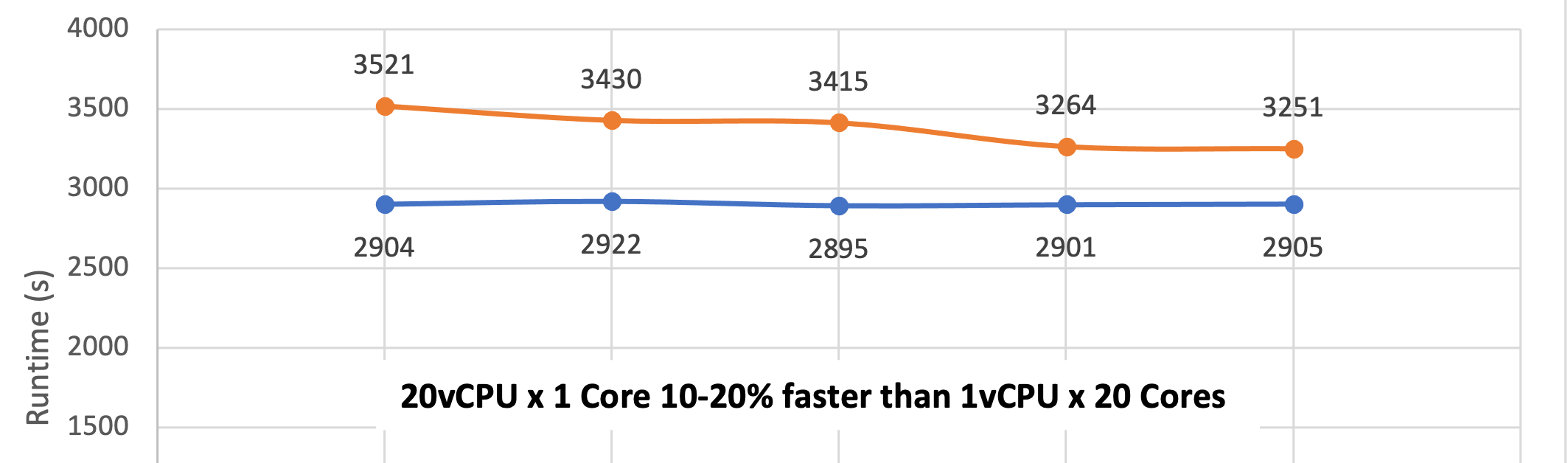

When measured from a SQLServer instance the performance for the class of “badly written SQL” that we started by discussing compares very well to hosted databses on hyperscale public clouds – in this case AWS. In the examples here for the same class of processors (Skylake on my Nutanix cluster and instance type r5.4xlarge) the transaction rate is about double on my Nutanix cluster on-prem.

Raw results and scripts

fio and diskspd results

fio

fio script

[global] ioengine=windowsaio direct=1 time_based runtime=60s size=10g [log] rw=write bs=4k iodepth=1 filename=I\:fiofile

fio result

log: (groupid=0, jobs=1): err= 0: pid=7992: Tue May 10 10:38:49 2022

write: IOPS=3658, BW=14.3MiB/s (14.0MB/s)(857MiB/60001msec)

slat (usec): min=8, max=1391, avg=10.45, stdev= 4.54

clat (usec): min=182, max=63906, avg=261.49, stdev=324.86

lat (usec): min=192, max=63928, avg=271.94, stdev=325.20

clat percentiles (usec):

| 1.00th=[ 210], 5.00th=[ 217], 10.00th=[ 221], 20.00th=[ 225],

| 30.00th=[ 231], 40.00th=[ 235], 50.00th=[ 239], 60.00th=[ 245],

| 70.00th=[ 251], 80.00th=[ 265], 90.00th=[ 285], 95.00th=[ 326],

| 99.00th=[ 603], 99.50th=[ 799], 99.90th=[ 3949], 99.95th=[ 4228],

| 99.99th=[ 6980]

bw ( KiB/s): min=10618, max=16419, per=97.55%, avg=14275.03, stdev=885.44, samples=119

iops : min= 2654, max= 4104, avg=3568.36, stdev=221.34, samples=119

lat (usec) : 250=67.93%, 500=30.66%, 750=0.79%, 1000=0.30%

lat (msec) : 2=0.10%, 4=0.12%, 10=0.09%, 20=0.01%, 50=0.01%

lat (msec) : 100=0.01%

diskspd

diskspd script

diskspd.exe -c32G I:\sqllog.dat diskspd.exe -w100 -Zr I:\sqllog.dat diskspd.exe -w100 -r -b4k -Su -d10 -o1 -L I:\sqllog.dat

diskspd result

Command Line: diskspd.exe -b4k -d120 -L -o1 -t1 -s -w100 -Sh -n I:\sqllog.dat

Input parameters:

timespan: 1

-------------

duration: 120s

warm up time: 5s

cool down time: 0s

affinity disabled

measuring latency

random seed: 0

path: 'I:\sqllog.dat'

think time: 0ms

burst size: 0

software cache disabled

hardware write cache disabled, writethrough on

performing write test

block size: 4KiB

using sequential I/O (stride: 4KiB)

number of outstanding I/O operations per thread: 1

threads per file: 1

IO priority: normal

System information:

computer name: WIN-VS182BVA7K7

start time: 2022/05/27 12:47:57 UTC

Results for timespan 1:

*******************************************************************************

actual test time: 120.00s

thread count: 1

proc count: 16

CPU | Usage | User | Kernel | Idle

-------------------------------------------

0| 0.53%| 0.27%| 0.26%| 99.47%

1| 0.05%| 0.03%| 0.03%| 99.95%

2| 0.13%| 0.07%| 0.07%| 99.87%

3| 6.56%| 0.52%| 6.04%| 93.44%

4| 0.04%| 0.03%| 0.01%| 99.96%

5| 0.05%| 0.00%| 0.05%| 99.95%

6| 0.07%| 0.05%| 0.01%| 99.93%

7| 0.04%| 0.01%| 0.03%| 99.96%

8| 0.07%| 0.05%| 0.01%| 99.93%

9| 0.04%| 0.01%| 0.03%| 99.96%

10| 0.18%| 0.01%| 0.17%| 99.82%

11| 0.14%| 0.07%| 0.08%| 99.86%

12| 0.08%| 0.04%| 0.04%| 99.92%

13| 0.12%| 0.05%| 0.07%| 99.88%

14| 0.03%| 0.01%| 0.01%| 99.97%

15| 0.29%| 0.16%| 0.13%| 99.71%

-------------------------------------------

avg.| 0.53%| 0.09%| 0.44%| 99.47%

Total IO

thread | bytes | I/Os | MiB/s | I/O per s | AvgLat | LatStdDev | file

--------------------------------------------------------------------------------------------------

0 | 1899958272 | 463857 | 15.10 | 3865.45 | 0.258 | 0.332 | I:\sqllog.dat (32GiB)

---------------------------------------------------------------------------------------------------

total: 1899958272 | 463857 | 15.10 | 3865.45 | 0.258 | 0.332

Read IO

thread | bytes | I/Os | MiB/s | I/O per s | AvgLat | LatStdDev | file

---------------------------------------------------------------------------------------------------

0 | 0 | 0 | 0.00 | 0.00 | 0.000 | N/A | I:\sqllog.dat (32GiB)

---------------------------------------------------------------------------------------------------

total: 0 | 0 | 0.00 | 0.00 | 0.000 | N/A

Write IO

thread | bytes | I/Os | MiB/s | I/O per s | AvgLat | LatStdDev | file

---------------------------------------------------------------------------------------------------

0 | 1899958272 | 463857 | 15.10 | 3865.45 | 0.258 | 0.332 | I:\sqllog.dat (32GiB)

---------------------------------------------------------------------------------------------------

total: 1899958272 | 463857 | 15.10 | 3865.45 | 0.258 | 0.332

SQL

Create the test table

USE [inserttestdb] GO SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO CREATE TABLE [dbo].[table1]( [col1] [nchar](10) NULL, [col2] [bigint] NULL ) ON [PRIMARY] GO

Test Script

use inserttestdb;

set nocount on;

declare @start datetime = getutcdate();

declare @i int = 0;

while @i < 100000

begin

INSERT INTO table1 (

col1,col2)

VALUES('logmydata',(SELECT RAND()*(10-5)+5));

set @i = @i+1;

end

select datediff(ms, @start, getutcdate());

Really cool breakdown Gary.