VM CPU Topology

The topology (layout) in which AHV presents virtual sockets/CPUs to the guest operating system will usually differ from the physical topology. This is expected, because we typically present only a subset of all cores to the guest VMs.

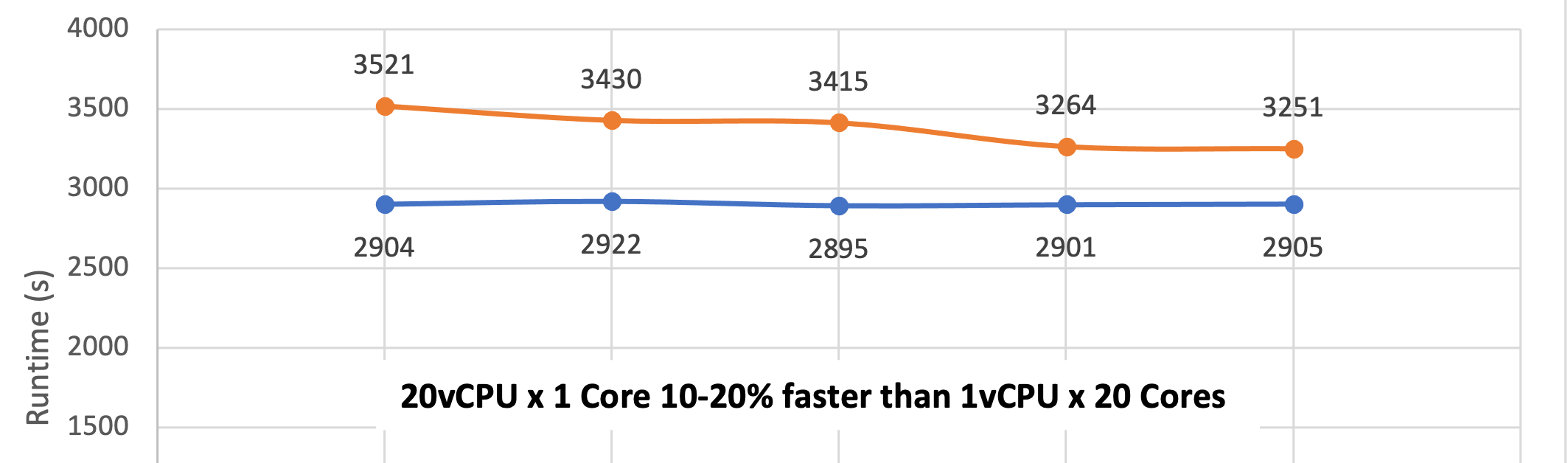

Usually it is the total number of vCPUs given to the VM that matters, not the specific topology. However, when SQL Server runs an analytical workload (a TPC-H-like workload from HammerDB), the topology passed to the VM does make a difference: between 10% and 20%, measured by total runtime.

[I think that the reason we see a difference here is that (a) the analytical workloads use hardly any storage bandwidth (I sized the database to fit in memory) and (b) there is probably a lot of cross-talk between the cores/memory as the DB engine issues parallel queries.]

At any rate, passing 20 cores as “20 sockets of 1 core” beats “1 socket with 20 cores” by a wide margin. The physical topology is two sockets with 20 cores each. Thankfully, the better-performing option is the default.
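"Measured by total runtime" can be read in more than one way. The sketch below assumes it means the percentage reduction in runtime relative to the slower configuration. The helper name `runtime_improvement_pct` and the inputs are hypothetical and used for illustration only. They are not measured results.

```python
def runtime_improvement_pct(baseline_s: float, candidate_s: float) -> float:
    """Percentage reduction in total runtime of `candidate_s` relative to `baseline_s`.

    Assumption: "difference measured by total runtime" means (baseline - candidate) / baseline.
    """
    if baseline_s <= 0:
        raise ValueError("baseline runtime must be positive")
    return (baseline_s - candidate_s) / baseline_s * 100.0


# Hypothetical inputs, for illustration only (not data from the experiment).
baseline = 100.0   # e.g. "1 socket with 20 cores"
candidate = 85.0   # e.g. "20 sockets of 1 core"
print(f"{runtime_improvement_pct(baseline, candidate):.1f}% shorter runtime")
```

Under this definition, a result in the 10% to 20% range means the faster topology finishes in 80% to 90% of the slower one's runtime.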

Available constructs in AHV (KVM/QEMU)

Example: 16 CPUs allocated by AHV

AHV uses two constructs when passing CPUs and sockets to guests.

- vCPU(s): an unfortunate name, since this is really the number of sockets passed to the guest.

- Number of cores per vCPU: how many cores per socket the guest sees.
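The two AHV settings correspond naturally to QEMU's generic `-smp` option, which takes a total CPU count plus `sockets`, `cores` and `threads`. The function below is a hypothetical sketch that assumes "vCPU(s)" maps to QEMU sockets and "cores per vCPU" maps to QEMU cores, with one thread per core. It shows the standard QEMU syntax only. It does not claim to show how AHV actually invokes QEMU.

```python
def ahv_to_qemu_smp(num_vcpus: int, cores_per_vcpu: int) -> str:
    """Build a QEMU -smp argument from AHV-style settings (hypothetical mapping).

    Assumption: AHV "vCPU(s)" -> QEMU sockets, "cores per vCPU" -> QEMU cores,
    and one thread per core.
    """
    if num_vcpus < 1 or cores_per_vcpu < 1:
        raise ValueError("both values must be >= 1")
    total = num_vcpus * cores_per_vcpu  # the guest sees sockets * cores CPUs
    return f"-smp {total},sockets={num_vcpus},cores={cores_per_vcpu},threads=1"


print(ahv_to_qemu_smp(16, 1))  # -smp 16,sockets=16,cores=1,threads=1
print(ahv_to_qemu_smp(1, 16))  # -smp 16,sockets=1,cores=16,threads=1
```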

Using these two constructs, AHV could present, for example:

- 16 sockets with 1 core per socket

- 1 socket with 16 cores on that single socket

- 4 sockets with 4 cores per socket

- 2 sockets with 8 cores per socket

etc.
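Every valid layout is a factor pair of the total CPU count: sockets × cores per socket = total. The short sketch below enumerates them. The function name `topologies` is illustrative.

```python
def topologies(total_cpus: int) -> list[tuple[int, int]]:
    """Return every (sockets, cores_per_socket) pair whose product is total_cpus."""
    return [(s, total_cpus // s) for s in range(1, total_cpus + 1) if total_cpus % s == 0]


for sockets, cores in topologies(16):
    print(f"{sockets} socket(s) x {cores} core(s) per socket")
```

For 16 CPUs this prints five layouts: 1×16, 2×8, 4×4, 8×2 and 16×1. That matches the list above plus the 8×2 case covered by "etc.".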

How do these appear to the guest (Windows 2016)

16 sockets, 1 core per socket

Take, for example, the first config: 16 sockets with 1 core per socket.

1 socket, 16 cores on a single socket

The opposite config: a single socket with 16 cores.
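The examples above use Windows. On a Linux guest, the same layout can be read from `/proc/cpuinfo`. Each logical CPU block carries a `physical id` (the socket) and a `core id`. The sketch below counts distinct sockets and cores from that text. The function name and the synthetic sample strings are hypothetical, and the samples only imitate the two configs above.

```python
def guest_topology(cpuinfo_text: str) -> tuple[int, int, int]:
    """Return (sockets, cores, logical_cpus) parsed from Linux /proc/cpuinfo text."""
    sockets: set[str] = set()
    cores: set[tuple[str, str | None]] = set()
    logical = 0
    physical_id = core_id = None
    for line in cpuinfo_text.splitlines() + [""]:  # trailing "" flushes the last block
        if not line.strip():
            # A blank line ends one logical processor's block.
            if physical_id is not None:
                sockets.add(physical_id)
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            core_id = value
    return len(sockets), len(cores), logical


# Synthetic samples mimicking the two configs (not captured from a real guest).
sample_16x1 = "\n\n".join(f"processor\t: {i}\nphysical id\t: {i}\ncore id\t\t: 0" for i in range(16))
sample_1x16 = "\n\n".join(f"processor\t: {i}\nphysical id\t: 0\ncore id\t\t: {i}" for i in range(16))
print(guest_topology(sample_16x1))  # (16, 16, 16)
print(guest_topology(sample_1x16))  # (1, 16, 16)
```

On a real Linux guest you would pass `open("/proc/cpuinfo").read()`. The logical CPU count is the same in both layouts; only the socket count changes.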

Effect on guest performance

The hypervisor sees each guest vCPU as a thread, and from that perspective KVM/QEMU/Linux does not really care how the cores are presented to the guest. For the time being, we will leave NUMA aside and assume that the number of cores presented to the guest is <= the number of physical cores on a single physical socket.
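The two points above can be written down as a small model. First, the host-side thread count depends only on the total number of guest CPUs, not on the socket/core split. Second, the simplifying assumption is that the guest fits within one physical socket. The class and function names below are hypothetical. The host shape is the 2 × 20-core machine described earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostTopology:
    sockets: int
    cores_per_socket: int


def host_vcpu_threads(num_vcpus: int, cores_per_vcpu: int) -> int:
    """Host threads backing the VM: one per guest CPU, regardless of the layout."""
    return num_vcpus * cores_per_vcpu


def fits_on_one_socket(guest_cpus: int, host: HostTopology) -> bool:
    """The simplifying assumption: the guest needs no more than one socket's cores."""
    return guest_cpus <= host.cores_per_socket


host = HostTopology(sockets=2, cores_per_socket=20)  # two sockets of 20 cores
print(host_vcpu_threads(16, 1), host_vcpu_threads(1, 16))  # 16 16
print(fits_on_one_socket(20, host))  # True
print(fits_on_one_socket(24, host))  # False
```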

However, it seems that the guest does care how the CPUs are presented. Put another way, the guest makes scheduling decisions based on how it thinks the cores are laid out. Here is an example with Windows and SQL Server serving a HammerDB TPC-H workload.

| HammerDB Driver Setup | HammerDB vUser Config |
|---|---|
|  |  |

In this experiment, SQL Server is configured with 20 vCPUs (there are 20 real cores on each physical socket), and the DOP (degree of parallelism) is also set to 20. So we can expect some coordination between threads as they carve up the query across cores.
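As a loose analogy for what a DOP of 20 implies, the sketch below splits one aggregation into `dop` partitions, runs them on a worker pool, and then combines the partial results. The combine step is where threads must coordinate. This is not how SQL Server executes parallel plans; `parallel_sum` is a hypothetical illustration. Python threads are also constrained by the GIL, so the example shows the partition-and-combine shape rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_sum(values: list[int], dop: int) -> int:
    """Split `values` into at most `dop` chunks, sum each on a worker, then combine."""
    if dop < 1:
        raise ValueError("dop must be >= 1")
    if not values:
        return 0
    chunk = -(-len(values) // dop)  # ceiling division so at most `dop` partitions
    parts = [values[i:i + chunk] for i in range(0, len(values), chunk)]
    with ThreadPoolExecutor(max_workers=dop) as pool:
        partials = list(pool.map(sum, parts))  # each worker aggregates its own partition
    return sum(partials)  # coordination point: gather and merge the partial results


print(parallel_sum(list(range(1_000_000)), dop=20))  # 499999500000
```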