We have started seeing misaligned partitions on Linux guests running certain HDFS distributions. How these partitions became misaligned is a bit of a mystery, because the only way I know to do this on Linux is to create a partition using the old DOS-compatible format, like this (using -c=dos and -u=cylinders):

$ sudo fdisk -c=dos -u=cylinders /dev/sdc

When taking the offered defaults, the partition begins 1536 bytes into the virtual disk, meaning that all writes are offset by 1536 bytes. For example, a 1MB write intended for offset 104857600 is actually sent to the back-end storage at offset 104859136. Underlying storage is typically aligned to 4K at a minimum, and often larger. In terms of performance, this means that every write that should simply overwrite the existing data no longer lines up with the storage blocks, so the storage needs to read the first 1536 bytes of the underlying block, then merge in the new data. On SSD systems this results in a CPU increase to do the extra work. On HDD systems the HDDs themselves become busier and throughput drops, sometimes drastically. In the experiment below, I can achieve only about 60% of the aligned performance when misaligned.
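The offset arithmetic above can be sketched in a few lines of Python. This is only an illustration: the 4096-byte block size is an assumption taken from the "4K at a minimum" remark, and the helper names `physical_offset` and `partial_blocks` are hypothetical. It counts how many back-end blocks a write covers only partially, since those are the blocks that would need a read and merge.

```python
BLOCK = 4096  # assumed back-end block size; the text only says 4K is a typical minimum


def physical_offset(partition_start: int, logical_offset: int) -> int:
    """Offset on the virtual disk for a write at logical_offset inside the partition."""
    return partition_start + logical_offset


def partial_blocks(offset: int, length: int, block: int = BLOCK) -> int:
    """Count blocks the write covers only partially (head and/or tail). Assumes length > 0."""
    head = offset % block != 0
    tail = (offset + length) % block != 0
    first_block = offset // block
    last_block = (offset + length - 1) // block
    if first_block == last_block:
        # The whole write fits inside one block.
        return 1 if (head or tail) else 0
    return int(head) + int(tail)


one_mib = 1024 * 1024
aligned = physical_offset(0, 104857600)
misaligned = physical_offset(1536, 104857600)

print(misaligned, misaligned % BLOCK)
print(partial_blocks(aligned, one_mib), partial_blocks(misaligned, one_mib))
```

With a partition start of 0 the 1MB write covers whole blocks only. With a start of 1536 the same write begins and ends partway through a block, which is where the extra read work comes from under these assumptions.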

Am I misaligned?

The key is to look at the partition start offset. If the start offset is 1536B, then yes.

$ sudo parted -l
Model: VMware Virtual disk (scsi)
Disk /dev/sda: 2199GB
Sector size (logical/physical): 512B/512B
Partition Table: msdos

Number  Start  End     Size    Type     File system  Flags
 1      1536B  2199GB  2199GB  primary
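The same check can be scripted. The sketch below is a minimal example, not a definitive tool: it assumes the partition start can be read from `/sys/class/block/<partition>/start`, which Linux reports in 512-byte units, and it assumes a 4096-byte alignment boundary. The function names and the example partition name `sdc1` are hypothetical.

```python
import sys
from pathlib import Path

SECTOR = 512  # sysfs reports the partition start in 512-byte units


def is_aligned(start_bytes: int, boundary: int = 4096) -> bool:
    """True if the partition start falls on the assumed alignment boundary."""
    return start_bytes % boundary == 0


def partition_start_bytes(partition: str) -> int:
    """Read the start of a partition such as 'sdc1' from sysfs, in bytes."""
    sectors = int(Path(f"/sys/class/block/{partition}/start").read_text())
    return sectors * SECTOR


if __name__ == "__main__":
    # Usage (hypothetical script name): python3 check_align.py sdc1 sdd1
    for part in sys.argv[1:]:
        start = partition_start_bytes(part)
        state = "aligned" if is_aligned(start) else "MISALIGNED"
        print(f"{part}: start={start}B {state}")
```

A start of 1536B corresponds to sector 3, so a partition created as described above would be reported as misaligned.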

Performance Impact

fio shows the throughput to a misaligned partition at roughly 60% of the correctly aligned partition (129MiB/s versus 211MiB/s).

Aligned:

fio-2.20-27-g8dd0e
Starting 4 processes
wr1: (groupid=0, jobs=4): err= 0: pid=1515: Wed May 24 17:32:54 2017
  write: IOPS=210, BW=211MiB/s (221MB/s)(8192MiB/38913msec)
    slat (usec): min=42, max=337, avg=114.38, stdev=26.72

Misaligned:

fio-2.20-27-g8dd0e
Starting 4 processes
wr1: (groupid=0, jobs=4): err= 0: pid=1414: Wed May 24 17:40:51 2017
  write: IOPS=129, BW=129MiB/s (135MB/s)(8192MiB/63472msec)
    slat (usec): min=38, max=243, avg=111.12, stdev=26.75
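As a quick sanity check on the two fio runs, the ratio can be computed from the bandwidth and from the runtime. The numbers are copied from the output above; the variable names are just for illustration.

```python
# Values taken from the two fio runs above.
aligned_mib_s = 211
misaligned_mib_s = 129
aligned_ms = 38913
misaligned_ms = 63472

# Both runs wrote the same 8192MiB, so the two ratios should roughly agree.
throughput_ratio = misaligned_mib_s / aligned_mib_s
runtime_ratio = aligned_ms / misaligned_ms

print(f"throughput ratio: {throughput_ratio:.2f}")
print(f"runtime ratio:    {runtime_ratio:.2f}")
```

Both ratios land close to 0.6, which is where the "about 60%" figure comes from.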

iostat shows interesting/weird behavior. What's curious here is that while we do see the drop to roughly 60% in wsec/s, the await, r_await and w_await values would completely throw you off if you did not know what was happening. In the misaligned case, the w_await is lower than in the aligned case. That cannot be true. It's as if the average await time went down (which is expected, since with misalignment we do a small read for every large write) and the r_await and w_await are simply calculated pro-rata from the r/s and w/s.

Aligned: (IO destined for /dev/sd[cdef])

Misaligned: (IO destined for /dev/sd[cdef])

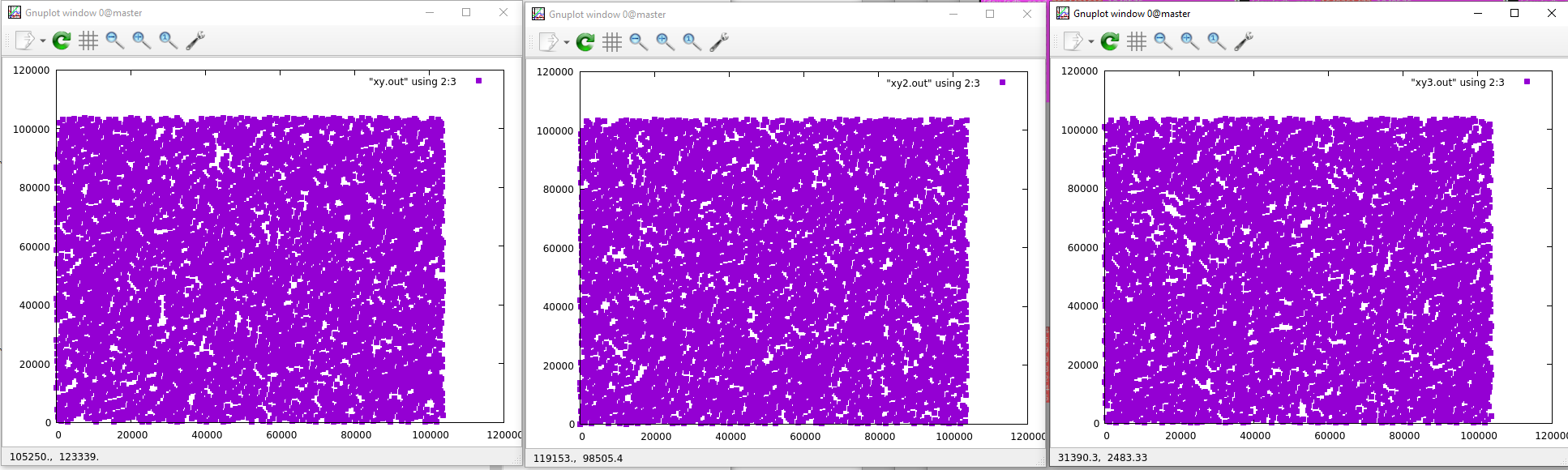

Observing in Nutanix: Correctly Aligned (http://CVMIP:2009/vdisk_stats)

Observing in Nutanix: Misaligned (http://CVMIP:2009/vdisk_stats)